AI-Powered Application Development Guide in Education

This guide presents a practical roadmap for building impactful edtech products. It takes an end-to-end approach to AI-enhanced lesson experiences, personalized learning, learning analytics, and adaptive assessment, and it treats generative AI, LLM-centric architectures, prompt engineering, retrieval-augmented generation (RAG), data privacy, and ethics from an operational perspective.

1) Why now? Forces behind AI in education

As learning content is rapidly digitized and generative AI tools mature, institutions and startups can directly improve student outcomes and teacher productivity. LLM-based assistants scale workflows such as assignment feedback, rubric-based grading, microlearning creation, and personalization.

Aligning business goals and technical needs

- Learning objective: Adjust the pace for each learner and close individual knowledge gaps.

- Measurable metrics: Completion rate, quiz accuracy, engagement, Net Promoter Score (NPS).

- Compliance & trust: Data handling aligned with GDPR/FERPA-like regimes.

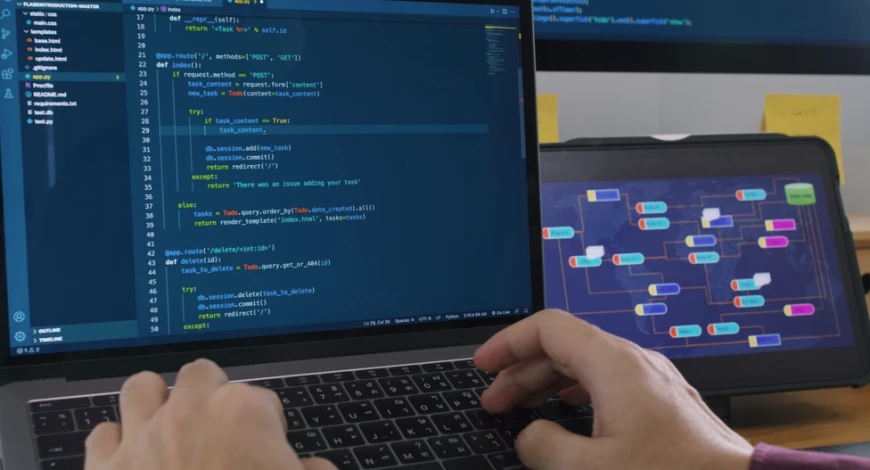
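The metrics above are straightforward to compute once learner activity is aggregated. The sketch below assumes a hypothetical per-learner record (`LearnerRecord` and its fields are illustrative, not taken from any specific platform) and uses the standard NPS definition: the percentage of promoters (scores 9–10) minus the percentage of detractors (scores 0–6).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LearnerRecord:
    # Hypothetical per-learner summary; field names are illustrative.
    completed: bool
    quiz_correct: int
    quiz_total: int
    nps_score: Optional[int]  # 0-10 survey answer, None if the learner skipped it


def completion_rate(records: List[LearnerRecord]) -> float:
    return sum(r.completed for r in records) / len(records) if records else 0.0


def quiz_accuracy(records: List[LearnerRecord]) -> float:
    total = sum(r.quiz_total for r in records)
    return sum(r.quiz_correct for r in records) / total if total else 0.0


def net_promoter_score(records: List[LearnerRecord]) -> float:
    # NPS = % promoters (9-10) minus % detractors (0-6), ranging from -100 to 100.
    scores = [r.nps_score for r in records if r.nps_score is not None]
    if not scores:
        return 0.0
    promoters = sum(s >= 9 for s in scores)
    detractors = sum(s <= 6 for s in scores)
    return 100.0 * (promoters - detractors) / len(scores)


records = [
    LearnerRecord(True, 8, 10, 10),
    LearnerRecord(False, 3, 5, 4),
    LearnerRecord(True, 9, 10, 9),
    LearnerRecord(True, 0, 0, None),
]
print(completion_rate(records), quiz_accuracy(records), round(net_promoter_score(records), 1))
# prints: 0.75 0.8 33.3
```

Engagement is deliberately left out: how it is defined (time on task, sessions per week, assistant turns) varies by product and should be agreed on before the pilot.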

2) Architectural baseline: an LLM-centric reference

The dominant pattern is LLM orchestration backed by RAG, which safely injects institutional content into answer generation.

High-level reference architecture

- Client: Chat assistant, course assistant, content generator.

- API Layer: Orchestration, rate limiting, auth (OIDC), sessions.

- RAG Service: Ingestion, embeddings, vector search, citations.

- LLM Layer: Output formatting, prompt templates, guardrails.

- MLOps/Observability: Prompt versioning, telemetry, error and latency tracking.

- Data Store: Content metadata, profiles, policy enforcement.
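The request path through these layers can be sketched as plain functions. This is a minimal, framework-free illustration under stated assumptions: `authenticate`, `retrieve`, and `llm` are hypothetical callables standing in for your OIDC token validation, vector search, and model provider, and the guardrail shown (refuse answers that cite none of the retrieved passages) is one possible policy, not a standard.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Passage:
    source_id: str  # used for citation display
    text: str


@dataclass
class Answer:
    text: str
    citations: List[str] = field(default_factory=list)


def build_prompt(question: str, passages: List[Passage]) -> str:
    # Prompt-template step: tag each passage with its id so the model can cite it.
    context = "\n".join(f"[{p.source_id}] {p.text}" for p in passages)
    return (
        "You are a course assistant. Answer only from the context and cite "
        "sources as [id].\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def answer_question(
    token: str,
    question: str,
    authenticate: Callable[[str], bool],
    retrieve: Callable[[str], List[Passage]],
    llm: Callable[[str], str],
) -> Answer:
    # API layer: reject unauthenticated requests before spending any model tokens.
    if not authenticate(token):
        raise PermissionError("invalid or expired session")
    # RAG service: fetch institutional passages relevant to the question.
    passages = retrieve(question)
    # LLM layer: generate from the templated prompt.
    raw = llm(build_prompt(question, passages))
    # Guardrail: keep only citations that point at passages we actually retrieved.
    cited = [p.source_id for p in passages if f"[{p.source_id}]" in raw]
    if not cited:
        return Answer("I could not find this in the course materials.")
    return Answer(raw, cited)


# Usage with stub components:
answer = answer_question(
    "valid-token",
    "What does photosynthesis produce?",
    authenticate=lambda t: t == "valid-token",
    retrieve=lambda q: [Passage("bio-3", "Photosynthesis produces glucose and oxygen.")],
    llm=lambda prompt: "It produces glucose and oxygen [bio-3].",
)
print(answer.citations)  # ['bio-3']
```

Passing the components in as callables keeps each layer replaceable, which also makes it easy to swap models or test the orchestration with stubs as above.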

3) Data strategy & compliance

Educational data is sensitive. Follow principles that ensure trust and sustainability:

- Process only the data required for the stated purpose (data minimization).

- Anonymize or pseudonymize data before using it for training.

- Obtain consent and publish transparent policies.

- Classify and encrypt data, with proper key management.

- Keep logs free of PII.
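Two of these principles translate directly into code. A minimal sketch, assuming the secret key is supplied by your key-management system (it is hard-coded here only for the demo): a keyed HMAC-SHA256 turns student IDs into stable pseudonyms for analytics and training data, and a regex pass redacts e-mail addresses and phone-like numbers before a message reaches the logs. The two patterns are illustrative and will miss many forms of PII (names, addresses) while over-matching some digit runs such as dates; production systems usually add a dedicated PII-detection step.

```python
import hashlib
import hmac
import re

# Assumption: in production this key comes from a KMS or secret store, never source code.
DEMO_KEY = b"demo-only-key"

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def pseudonymize(student_id: str, key: bytes = DEMO_KEY) -> str:
    # Keyed hash: the same ID always maps to the same token, but without the key
    # nobody can recompute tokens from guessed IDs (unlike a plain, unkeyed hash).
    return hmac.new(key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_pii(message: str) -> str:
    # Redact e-mails first, then phone-like digit runs, before the line is logged.
    return PHONE_RE.sub("[PHONE]", EMAIL_RE.sub("[EMAIL]", message))


print(pseudonymize("s-1001"))
print(mask_pii("Contact ayse@example.edu or +90 555 123 4567"))
# Contact [EMAIL] or [PHONE]
```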

4) Model selection: a multi-model approach

- Low-latency models for rapid feedback.

- Long-context models for content generation.

- Multimodal models for image- and audio-based analysis.

- Cost optimization through model mixing (routing) and response caching.
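Routing and caching can be combined in a thin client layer. The sketch assumes a hypothetical model catalogue (names, context limits, and prices are placeholders, not real offerings) and a `call` function supplied by your provider SDK: each request goes to the cheapest model that satisfies its context-length and modality needs, and identical prompts are served from an in-memory cache.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class ModelProfile:
    name: str                  # hypothetical model identifier
    max_context_tokens: int
    multimodal: bool
    cost_per_1k_tokens: float  # placeholder price units


# Hypothetical catalogue; replace with your provider's actual models and prices.
CATALOGUE = [
    ModelProfile("fast-small", 8_000, False, 0.1),
    ModelProfile("long-context", 200_000, False, 1.0),
    ModelProfile("vision-audio", 32_000, True, 1.5),
]


def route(prompt_tokens: int, needs_media: bool) -> ModelProfile:
    """Return the cheapest model whose context window and modality fit the request."""
    candidates = [
        m for m in CATALOGUE
        if m.max_context_tokens >= prompt_tokens and (m.multimodal or not needs_media)
    ]
    if not candidates:
        raise ValueError("no model fits this request")
    return min(candidates, key=lambda m: m.cost_per_1k_tokens)


class CachedClient:
    """Wraps a provider call and memoizes responses per (model, prompt) pair."""

    def __init__(self, call: Callable[[str, str], str]):
        self._call = call
        self._cache: Dict[Tuple[str, str], str] = {}
        self.misses = 0

    def complete(self, prompt: str, prompt_tokens: int, needs_media: bool = False) -> str:
        model = route(prompt_tokens, needs_media)
        key = (model.name, prompt)
        if key not in self._cache:
            self.misses += 1
            self._cache[key] = self._call(model.name, prompt)
        return self._cache[key]


client = CachedClient(lambda model, prompt: f"{model} answered: {prompt}")
print(client.complete("Give one hint for 2x + 3 = 7", prompt_tokens=12))
print(client.complete("Give one hint for 2x + 3 = 7", prompt_tokens=12))
print(client.misses)  # 1
```

An exact-match cache like this only makes sense where identical prompts should get identical answers (for example, deterministic content generation); for open-ended chat, a shared cache with an expiry time is a more realistic next step.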

5) Prompt engineering and system design

Predictable quality requires prompt templates with clear system roles, few-shot exemplars, and explicit evaluation criteria.

- Structured outputs (JSON schemas for rubrics, tags, suggestions).

- Critique passes (draft + review).

- Safety guardrails and mandatory citations.

- Localization for Turkish and English (TR/EN) with controlled terminology.
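Structured outputs are only useful if they are checked before they reach a learner. A minimal sketch using only the standard library, assuming a hypothetical rubric and a response shape of `{"scores": [{"criterion", "score", "evidence"}, ...]}` that your prompt template would ask the model to produce: anything malformed raises `ValueError`, which the caller can feed back to the model as a repair prompt or escalate to a human.

```python
import json
from typing import Any, Dict, List

# Hypothetical rubric: criterion name -> maximum points.
RUBRIC: Dict[str, int] = {"thesis": 4, "evidence": 4, "clarity": 2}


def parse_rubric_feedback(raw: str, rubric: Dict[str, int] = RUBRIC) -> List[Dict[str, Any]]:
    """Parse a model's JSON rubric output and enforce the expected structure."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    items = data.get("scores") if isinstance(data, dict) else None
    if not isinstance(items, list):
        raise ValueError("expected an object with a 'scores' list")
    seen = set()
    for item in items:
        if not isinstance(item, dict):
            raise ValueError("each score entry must be an object")
        name, score = item.get("criterion"), item.get("score")
        if not isinstance(name, str) or name not in rubric:
            raise ValueError(f"unknown criterion: {name!r}")
        if name in seen:
            raise ValueError(f"duplicate criterion: {name!r}")
        seen.add(name)
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(score, bool) or not isinstance(score, int) or not 0 <= score <= rubric[name]:
            raise ValueError(f"score out of range for {name!r}: {score!r}")
        if not str(item.get("evidence", "")).strip():
            raise ValueError(f"missing evidence for {name!r}")
    missing = set(rubric) - seen
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    return items
```

Requiring a non-empty `evidence` field for every criterion is what ties the structured output back to the rationale and evidence-trail requirements of rubric-based grading.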

Boosting relevance with RAG

- Appropriate embedding models and chunking strategies for the vector database.

- Re-ranking and metadata filters (grade, subject).

- Citation display for trust.
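These three ideas can be sketched end to end without a vector database. This is a toy under clearly stated assumptions: a bag-of-words `Counter` stands in for a real embedding model, a linear scan stands in for vector search, and the metadata keys (`grade`, `subject`) are illustrative. The structure is what carries over: chunk with overlap, filter on metadata before ranking, and return chunk ids so the UI can display citations. A dedicated re-ranker would be a second pass over the top results.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Chunk:
    doc_id: str            # returned to the UI as the citation
    text: str
    meta: Dict[str, str]   # e.g. {"grade": "9", "subject": "biology"}


def chunk_words(text: str, size: int = 40, overlap: int = 10) -> List[str]:
    """Split text into fixed-size word windows that overlap, so boundary context is kept."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: lower-cased term counts.
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, chunks: List[Chunk], filters: Dict[str, str], k: int = 3) -> List[Chunk]:
    """Filter on metadata first, then rank the remaining chunks by similarity."""
    q = embed(query)
    eligible = [c for c in chunks if all(c.meta.get(key) == val for key, val in filters.items())]
    return sorted(eligible, key=lambda c: cosine(q, embed(c.text)), reverse=True)[:k]


chunks = [
    Chunk("bio-9-1", "Photosynthesis takes place in the chloroplasts of plant cells.",
          {"grade": "9", "subject": "biology"}),
    Chunk("bio-7-1", "Plants use sunlight to make food through photosynthesis.",
          {"grade": "7", "subject": "biology"}),
    Chunk("chem-9-1", "Indicators change colour in acids and bases.",
          {"grade": "9", "subject": "chemistry"}),
]
hits = search("Where does photosynthesis take place?", chunks, {"grade": "9", "subject": "biology"})
print([c.doc_id for c in hits])  # ['bio-9-1']
```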

6) Learning experience: personalization & microlearning

- Adaptive difficulty and targeted practice.

- Goal tracking and gamification.

- Diagnostic feedback via error patterns.
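Adaptive difficulty needs a running estimate of learner skill and item difficulty. One simple option, shown here as an assumption rather than a recommendation, is an Elo-style rating borrowed from chess: both ratings move after every answer in proportion to how surprising the outcome was. The 400-point scale, the step size `k=32`, and the 70% target success rate are illustrative defaults to tune against your own data; item response theory (IRT) models are the more rigorous alternative.

```python
from typing import Dict, Tuple


def expected_correct(learner: float, item: float) -> float:
    """Elo-style probability that a learner with this rating answers the item correctly."""
    return 1.0 / (1.0 + 10 ** ((item - learner) / 400.0))


def update(learner: float, item: float, correct: bool, k: float = 32.0) -> Tuple[float, float]:
    """Move both ratings toward the observed outcome; returns (new_learner, new_item)."""
    surprise = (1.0 if correct else 0.0) - expected_correct(learner, item)
    return learner + k * surprise, item - k * surprise


def next_item(learner: float, items: Dict[str, float], target: float = 0.7) -> str:
    """Pick the item whose predicted success probability is closest to the target."""
    return min(items, key=lambda name: abs(expected_correct(learner, items[name]) - target))


items = {"fractions-easy": 800.0, "fractions-medium": 850.0, "fractions-hard": 1200.0}
learner = 1000.0
print(next_item(learner, items))  # fractions-medium
learner, items["fractions-medium"] = update(learner, items["fractions-medium"], correct=True)
```

Because item ratings are updated too, the same loop gradually calibrates the question bank, and items whose ratings keep drifting are candidates for the error-pattern diagnostics above.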

7) Assessment: rubrics & reliable measurements

- Automated grading, with humans auditing 10–20% of graded submissions.

- Rationales and evidence trails.

- Fairness checks and bias mitigation.
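Two small pieces make the human audit systematic. The sketch assumes a 15% audit rate (inside the 10–20% band above) and categorical grade labels: hashing the submission id gives a deterministic, reproducible sample, and Cohen's kappa measures how well AI grades agree with human auditors beyond what chance would produce. Computing kappa separately for each student group is one simple input to fairness checks; what threshold counts as acceptable is a policy decision that this code does not settle.

```python
import hashlib
from collections import Counter
from typing import Hashable, Sequence


def selected_for_audit(submission_id: str, rate: float = 0.15) -> bool:
    """Deterministically route roughly `rate` of submissions to human review."""
    digest = hashlib.sha256(submission_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # approximately uniform between 0 and 1
    return bucket < rate


def cohens_kappa(ai: Sequence[Hashable], human: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between AI grades and human grades on the same items."""
    if len(ai) != len(human) or not ai:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(ai)
    observed = sum(a == h for a, h in zip(ai, human)) / n
    ai_counts, human_counts = Counter(ai), Counter(human)
    expected = sum(ai_counts[g] * human_counts[g] for g in ai_counts) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label; kappa is undefined, treat as agreement
    return (observed - expected) / (1 - expected)


audited = [sid for sid in (f"sub-{i}" for i in range(1000)) if selected_for_audit(sid)]
print(len(audited))
print(cohens_kappa(["A", "A", "B", "B"], ["A", "A", "B", "A"]))  # 0.5
```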

8) Productization & MLOps

- Prompt versioning, A/B testing, canary releases.

- Performance: accuracy, relevance, latency, cost.

- Safety: toxicity, copyright, hallucination rate.
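Prompt A/B tests and canary releases both need sticky traffic splitting, so a learner keeps seeing the same prompt version across sessions. A minimal sketch, assuming prompt versions are identified by hypothetical names such as `feedback-prompt-v1`: hashing the experiment name together with the user id yields a stable point that is mapped onto cumulative traffic shares.

```python
import hashlib
from typing import Dict


def assign_variant(user_id: str, experiment: str, weights: Dict[str, float]) -> str:
    """Sticky, hash-based assignment of a user to one prompt version.

    `weights` maps variant name -> traffic share, e.g. {"v1": 0.95, "v2-canary": 0.05}.
    """
    if not weights or any(w < 0 for w in weights.values()) or sum(weights.values()) <= 0:
        raise ValueError("weights must be non-negative with a positive total")
    # Salting with the experiment name keeps assignments independent across experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64 * sum(weights.values())
    cumulative = 0.0
    for variant, share in weights.items():
        cumulative += share
        if point < cumulative:
            return variant
    return list(weights)[-1]  # only reachable through floating-point rounding at the top edge


weights = {"feedback-prompt-v1": 0.95, "feedback-prompt-v2": 0.05}
print(assign_variant("student-42", "rubric-feedback", weights))
```

With the canary listed last and shares summing to 1, raising its share (for example from 0.05 to 0.10) only moves additional users into the canary; users already assigned to it stay there, which keeps the telemetry for each prompt version comparable.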

9) Sample 8-week MVP plan

- Weeks 1–2: Goals, personas, requirements, data inventory.

- Weeks 3–4: RAG prototype, prompt templates, safety policy.

- Weeks 5–6: UI/UX, chat flows, citation view, rubric module.

- Week 7: Pilot class, telemetry, fixes.

- Week 8: MVP launch, enablement, support.

10) Use cases

- Course assistant: Explanations, varied questions.

- Assignment feedback: Rubric-based, cited responses.

- Content generation: Microlearning content aligned with the instructional design.

- Academic integrity: Originality checks, source verification.

- Accessibility: TTS, summarization, plain language.

11) Best practices

- Start small and scale with real data.

- Be transparent about the role AI plays.

- Keep humans in the loop for critical decisions.

- Plan for scalability.

- Control costs through caching and model choice.

12) Common pitfalls

- Skipping data preparation.

- No citations or evidence.

- Single-model dependency.

- Optimizing without measurement.

13) Tools & stack

- Vector: FAISS, pgvector, Pinecone, Weaviate.

- Orchestration: LangChain, LlamaIndex, function calling.

- Evaluation: LLM-as-a-judge, human labels.

- Safety: PII masking, moderation APIs.

- Analytics: event tracking, feature store, dashboards.

14) Conclusion

With the right data, robust architecture, safety, and measurement, AI-powered education apps produce sustainable value. This guide helps teams ship MVPs fast, maintain quality, and scale safely.

Gürkan Türkaslan

3 October 2025, 12:41:33